
AI Suite

Installation

To install the plug-in, follow the Installation procedure tutorial.

Requirements

This extension depends on three main systems:

- OpenAI API. You need an account: go to https://chat.openai.com/auth/login, click Sign up, and complete the registration.

- Hugging Face Transformers library (JS). We use public models for NLP tasks such as spam and toxic content classification.

- Google TensorFlow library (JS). The extension uses the JavaScript version, TensorFlow.js, to train and run models in the browser.

Hugging Face and TensorFlow are free to use and need no credentials, so an OpenAI API key is all you need to get started.

Use the data generated by the models only as one component of your marketing and financial decision-making toolkit. Do not rely solely on predictions when making investment decisions.

We recommend including a disclaimer page for the Chatbot to inform your customers that the provided information may be imprecise, incorrect, or incomplete.

Advise customers to avoid entering sensitive information into the Chat or Prompt system, including credit card details, financial data, passwords, or other private personal information. Note that this data passes through OpenAI servers, and we cannot guarantee that it will not be used to improve their systems.

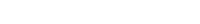

OpenAI

Important: after signing up, get your API key and set usage limits on your account as described below.

Open the Limits page.

Setting a limit protects your credit from abuse.

You will also be warned when half of your credit has been used.

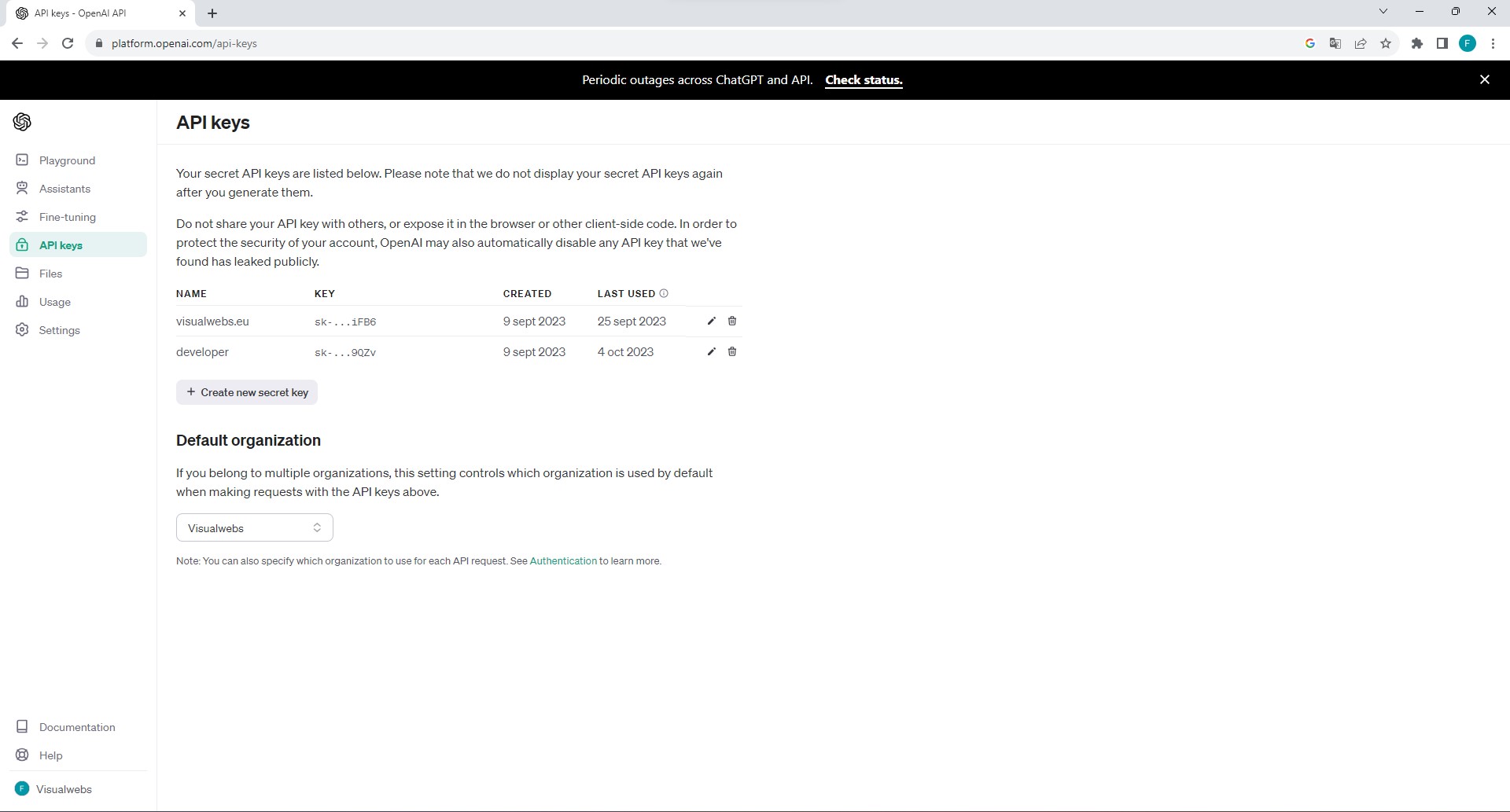

API key generation

Generate the key and store it in a secure place, such as your PC.

Open the API keys page.

Click on "Create new secret key".

The key cannot be viewed again after it is created; if you lose it, you need to create a new one.

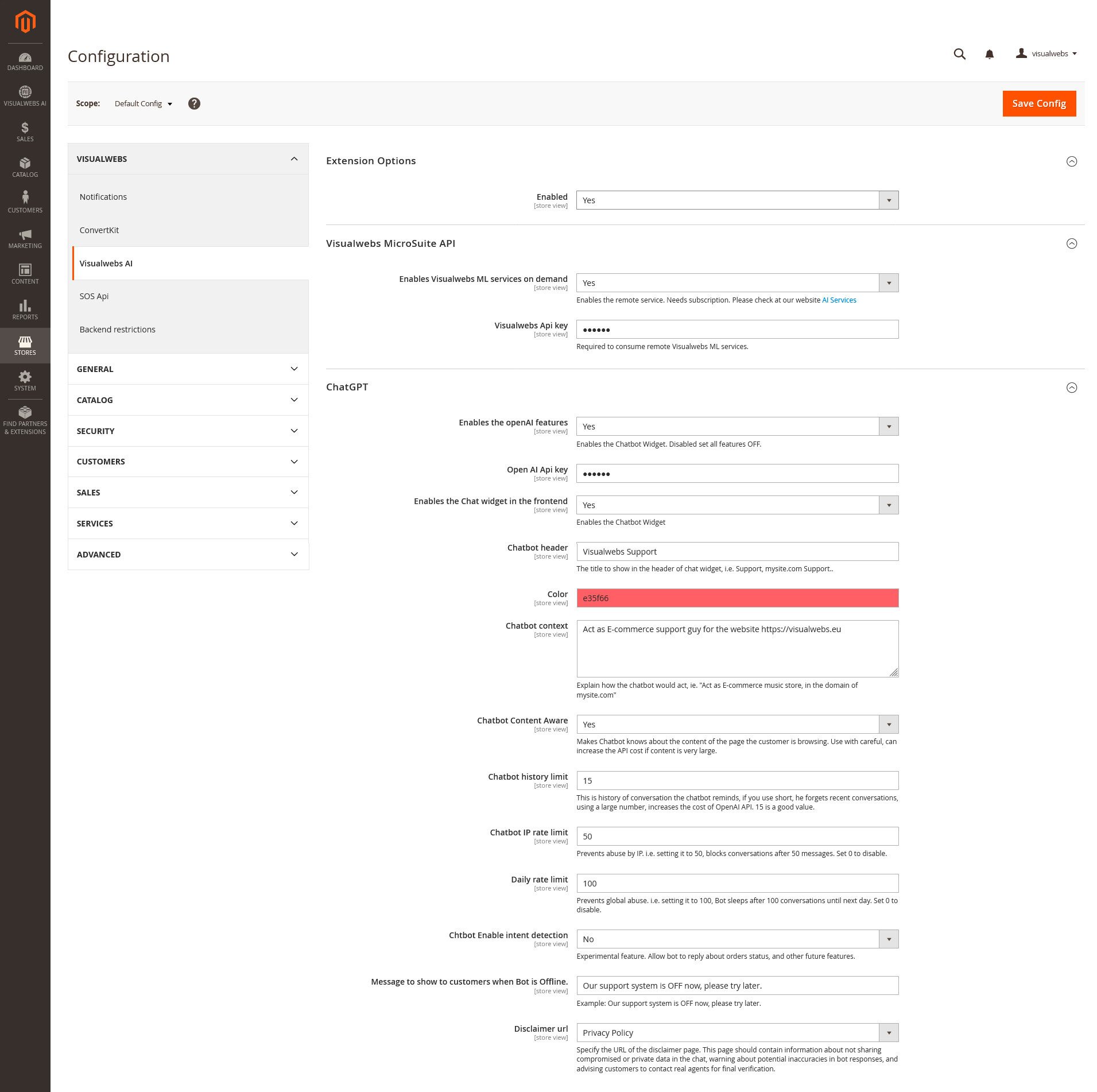

Extension settings

Check the instructions below.

Requires a valid subscription and API key.

Enter your API key here.

Enables OpenAI-related features such as the Chatbot and Prompts.

Enter your OpenAI key here.

Shows the Chatbot in your store.

Describe the Chatbot's context and "personality".

Such as a product page, category, or CMS page.

Use with caution: long content increases the cost of API calls.

Around 15 is standard for short conversations.

Increase this value if you want the Chatbot to remember older messages.
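A minimal Python sketch of how a message-history limit typically works, assuming the Chatbot keeps its context/personality as a system message and sends only the most recent messages to OpenAI on each call. The function name `trim_history` and the role/content message format (as used by the OpenAI Chat Completions API) are illustrative assumptions, not the extension's code.

```python
def trim_history(messages, max_messages=15):
    """Keep every system message plus the last `max_messages` dialogue messages.

    Assumption: messages are dicts with "role" and "content" keys.
    """
    system = [m for m in messages if m["role"] == "system"]
    dialogue = [m for m in messages if m["role"] != "system"]
    # Guard against max_messages <= 0, because dialogue[-0:] would return everything.
    recent = dialogue[-max_messages:] if max_messages > 0 else []
    return system + recent


# Hypothetical history: one system prompt followed by 20 alternating messages.
history = [{"role": "system", "content": "You are a friendly store assistant."}]
for i in range(20):
    role = "user" if i % 2 == 0 else "assistant"
    history.append({"role": role, "content": f"message {i}"})

trimmed = trim_history(history, 15)
print(len(trimmed))  # 16: the system prompt plus the 15 newest messages
```

Every message kept is resent on each call, which is why a higher value remembers more but makes each API call more expensive.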

Limits the number of messages the same user/IP can send in a single day.

Set to 0 to disable the limit.

Set to 0 to disable this limit.
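A rough Python sketch of a per-user/IP daily message limit where 0 disables the check. The class name and the in-memory counter are hypothetical; a real implementation would persist the counts (for example in the database) and discard old days.

```python
from collections import defaultdict
from datetime import date


class DailyMessageLimiter:
    """Allow at most `max_per_day` messages per client (user or IP) per day; 0 = unlimited."""

    def __init__(self, max_per_day):
        self.max_per_day = max_per_day
        self._counts = defaultdict(int)  # (client_id, day) -> messages sent

    def allow(self, client_id, today=None):
        if self.max_per_day == 0:
            return True  # limit disabled
        day = date.today() if today is None else today
        key = (client_id, day)
        if self._counts[key] >= self.max_per_day:
            return False
        self._counts[key] += 1
        return True


limiter = DailyMessageLimiter(max_per_day=2)
d = date(2024, 1, 1)
print([limiter.allow("203.0.113.7", d) for _ in range(3)])  # [True, True, False]
```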

Experimental feature.

Enter the text shown to users when the Chatbot is unavailable.

Select the privacy policy page where users can read the Chatbot's terms.

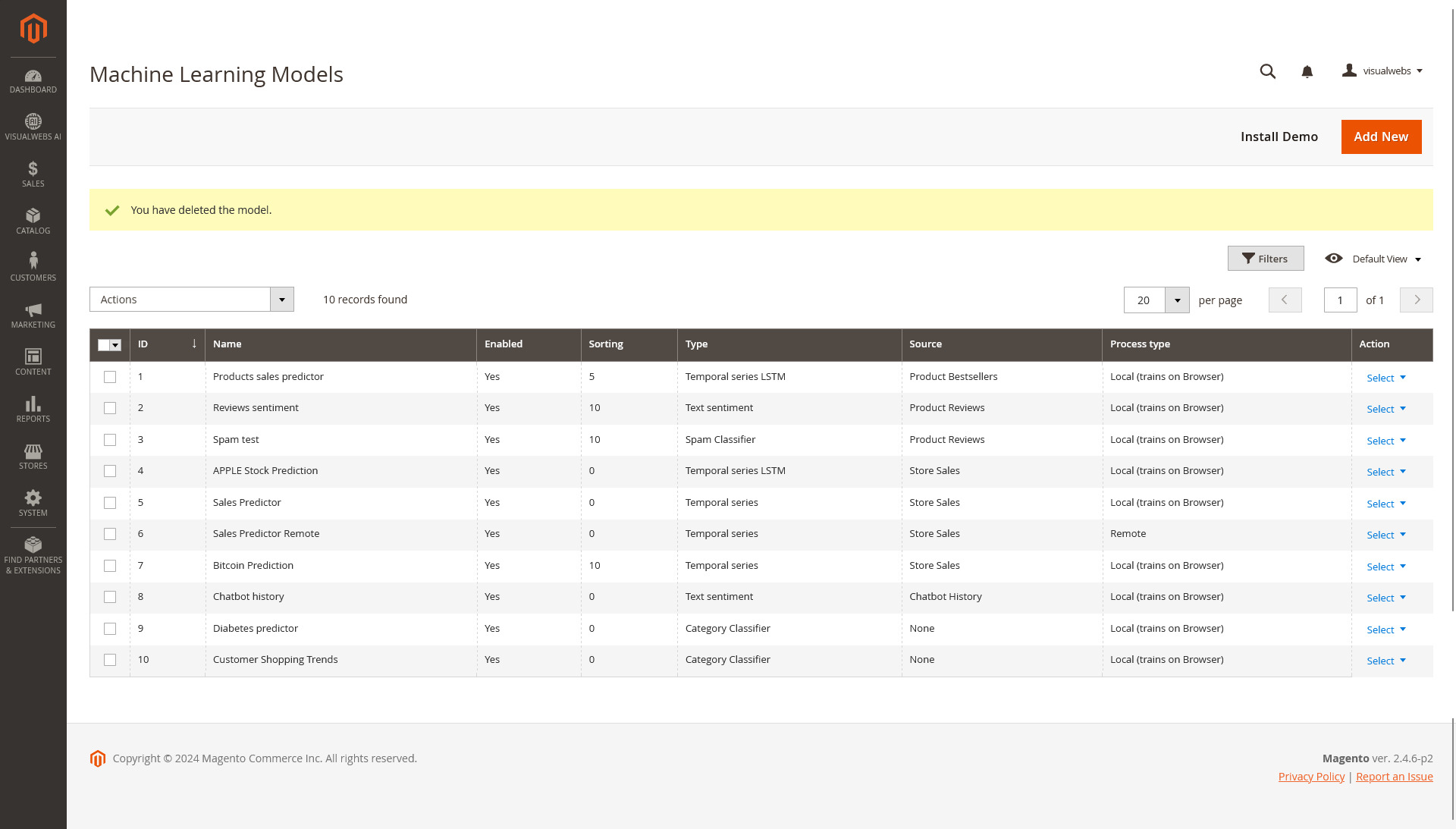

Machine Learning

This feature lets you train models on external CSV files or on your e-commerce entities, such as sales and reviews, to make predictions.

Model setup

To start using this tool, create at least one model.

The simplest way to see models working and understand the settings is to install the demo data from the top of the "ML models" page.

Create a new model

Install demo models

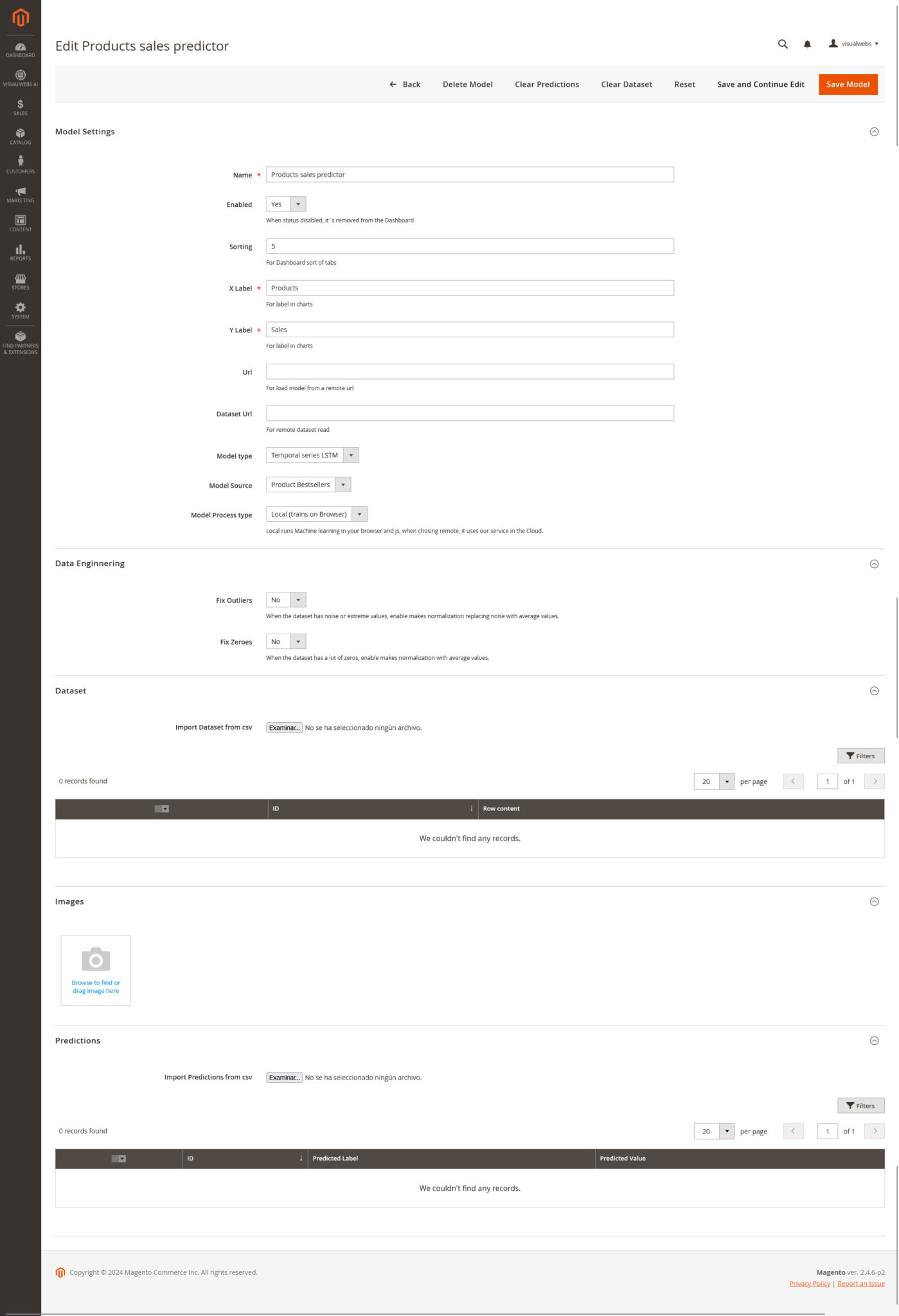

Edit settings

Each model's settings define its architecture, data source, and type.

Returns to the model listing.

Use with caution.

Removes the prediction data uploaded in the "Predictions" section of the model form.

If you delete it by mistake, you can upload it again.

Useful if you want to redo your changes.

Saves and returns to this form.

Also use it after uploading predictions or a dataset to complete the model update.

Enter the model name.

When enabled, the model is visible in the Dashboard.

The display order of models in the Dashboard. Lower numbers are displayed first.

The label displayed on the chart's X-axis, usually "Features" or "Time".

The label displayed on the chart's Y-axis, usually the prediction objective, such as "Price" or "Sales".

Usually leave this field as is; when you save the model, its URL is stored here.

It is better and more secure to import the dataset with the import button in the "Dataset" section.

LSTM usually gives better results than the non-LSTM option.

The Categorize classifier predicts one option from a list of two or more.

The text sentiment and Spam models are pre-trained models that detect true/false.

They can be used with an existing dataset or with Magento internal entities such as reviews.

The data source. Use "none" if you are working with a custom dataset.

Otherwise, use one of the other options, which are mapped to Magento internal data such as sales, reviews, and chatbot history.

Note: you need to subscribe and enter your API key in the "Visualwebs Microsuite API" extension configuration.

Imagine a dataset with 10, 15, 17, 1000 as store sales. If the value 1000 is an error in the dataset, it would confuse the model.

With Fix Outliers enabled, such very high or very low values are replaced with the median of the other values.
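A minimal Python sketch of median-based outlier replacement. The document does not say how the extension decides that a value is "very high or very low", so this sketch assumes the modified z-score rule (median absolute deviation, threshold 3.5); the function name is hypothetical.

```python
from statistics import median


def fix_outliers(values, threshold=3.5):
    """Replace outliers with the median of the remaining (non-outlier) values.

    Assumption: a value is an outlier when its modified z-score,
    0.6745 * |v - median| / MAD, exceeds `threshold`.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return list(values)  # no spread, nothing to flag

    def is_outlier(v):
        return 0.6745 * abs(v - med) / mad > threshold

    inliers = [v for v in values if not is_outlier(v)]
    replacement = median(inliers)
    return [replacement if is_outlier(v) else v for v in values]


print(fix_outliers([10, 15, 17, 1000]))  # [10, 15, 17, 15]
```

In the example above, 1000 is flagged and replaced with 15, the median of 10, 15, and 17. A median is used rather than a mean because the outlier itself would drag a mean upward.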

Imagine a dataset with 10, 15, 17, '', '', 12, '' as store sales.

With Fix Zeroes enabled, those empty values are replaced with the median of the other values.
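A minimal Python sketch of the Fix Zeroes behaviour described above. Which values count as "empty" is an assumption here: empty strings, `None`, and (given the feature's name) zeros. The function name is hypothetical.

```python
from statistics import median


def fix_missing(values, treat_zero_as_missing=True):
    """Replace empty values with the median of the non-empty values."""

    def is_missing(v):
        return v is None or v == "" or (treat_zero_as_missing and v == 0)

    present = [v for v in values if not is_missing(v)]
    if not present:
        raise ValueError("no non-empty values to compute a median from")
    fill = median(present)
    return [fill if is_missing(v) else v for v in values]


print(fix_missing([10, 15, 17, "", "", 12, ""]))
# [10, 15, 17, 13.5, 13.5, 12, 13.5]
```

The median of 10, 12, 15, and 17 is 13.5, so each empty cell receives that value.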

Import your CSV here; the data is stored in the database. The CSV must contain the field names in the first row.

Once the dataset is imported, a preview is shown here.

For example, detecting a face for authentication purposes.

These types of models are on the development roadmap and planned for release in 2024.

Provide the field names in the first row, followed by a dataset with two fields: the time in the first column and the prediction in the second.

Example CSV content:

"time","prediction"
"2010-01-01","1300"
"2010-01-02","1450"
...
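Before uploading, you could check that a predictions file has the expected shape. This Python sketch, using only the standard `csv` module, assumes the two-column layout described above (header row, then time and a numeric prediction); the function name is hypothetical and the extension's own import may validate differently.

```python
import csv
import io


def load_time_series_csv(text):
    """Parse a two-column CSV: a header row, then (time, prediction) rows."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if header is None:
        raise ValueError("empty CSV")
    if len(header) != 2:
        raise ValueError(f"expected 2 columns, got {len(header)}: {header}")
    rows = []
    for row_no, row in enumerate(reader, start=2):
        if not row:
            continue  # skip blank lines
        if len(row) != 2:
            raise ValueError(f"row {row_no}: expected 2 values, got {row}")
        time_value, prediction = row
        rows.append((time_value, float(prediction)))
    return header, rows


sample = '"time","prediction"\n"2010-01-01","1300"\n"2010-01-02","1450"\n'
header, rows = load_time_series_csv(sample)
print(header)  # ['time', 'prediction']
print(rows)    # [('2010-01-01', 1300.0), ('2010-01-02', 1450.0)]
```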

A preview of the prediction data is shown here.

Train a model

Models usually need retraining about once a month so that the predictions include the most recent data. Training is a manual process.

This video shows the entire training process. If you need an automated solution, see the "Automated predictions" section below.

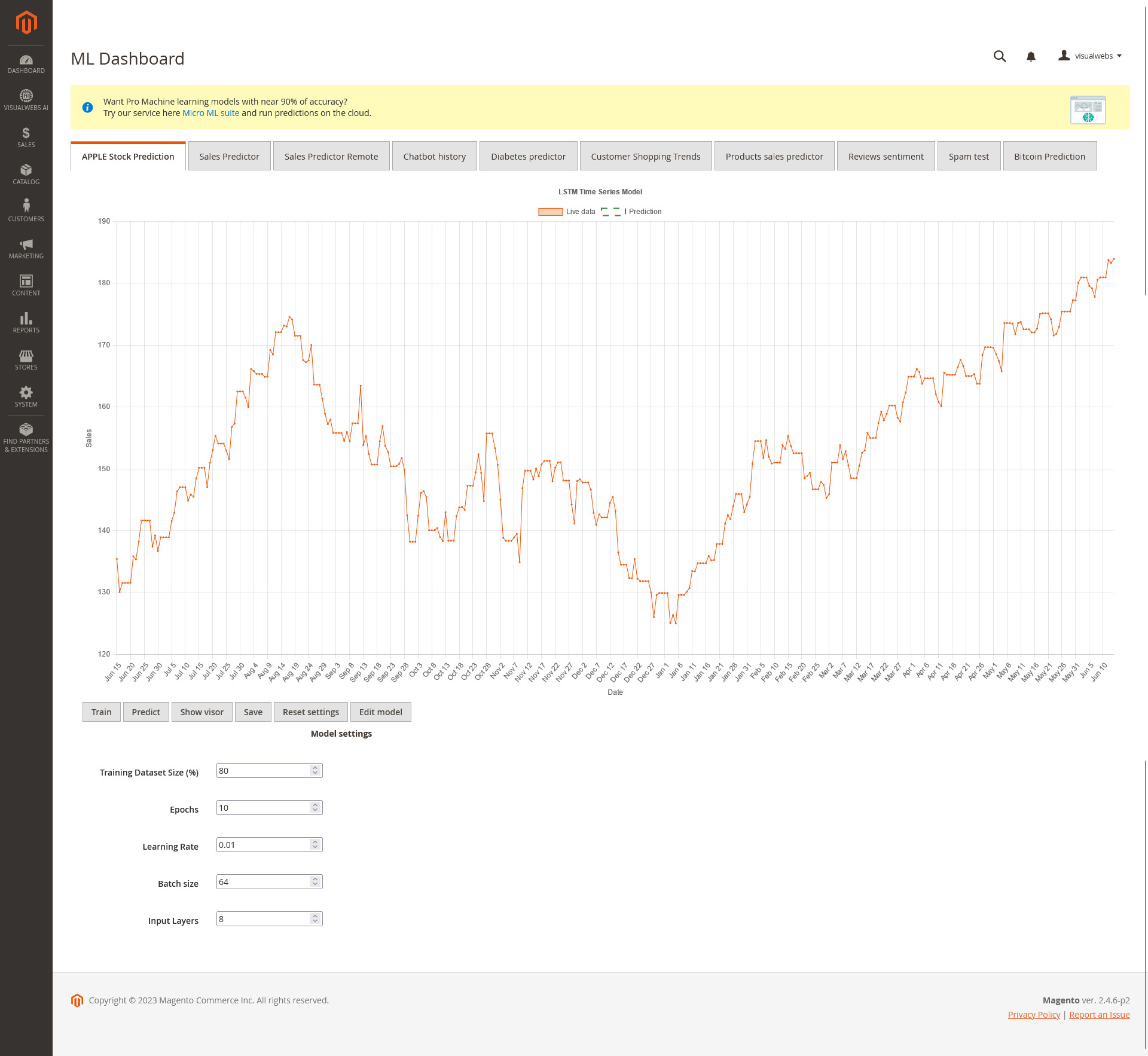

Shows all enabled models, sorted by the "Sorting" field in ascending order.

Each model has its own tab.

Model details and chart legend.

Y-axis label

X-axis label

Start here. When you are satisfied with the "Model settings", click it to train the model.

Shows TensorFlow charts (for advanced users).

Saves the trained model to the Magento media folder so it can be reused later without retraining.

Resets the "Model settings" to the default values.

Opens the model editor.

These settings control training and are very important for getting good results.

Usually around 70-80%. Use 100% once you have found good settings and want to train on the full live data.

For classifier models, set to 100%.
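The percentage above is the share of rows used for training, with the rest held back to check the model. A minimal Python sketch, assuming a chronological split (no shuffling), which suits time-series data; the extension's exact split logic is not documented and the function name is hypothetical.

```python
def split_train_test(rows, train_percent=80):
    """Chronological split: the first train_percent% of rows train, the rest test."""
    if not 0 < train_percent <= 100:
        raise ValueError("train_percent must be in (0, 100]")
    cut = int(len(rows) * train_percent // 100)
    return rows[:cut], rows[cut:]


train, test = split_train_test(list(range(10)), 80)
print(train)  # [0, 1, 2, 3, 4, 5, 6, 7]
print(test)   # [8, 9]
```

At 100% the test part is empty, so there is nothing left to evaluate against. That is why 100% is best reserved for when the settings are already known to work.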

For production models, a good value is 100 or higher.

The most commonly used values are 0.1, 0.01, and 0.001.
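Assuming this field is the learning rate (the values listed are typical for it), this Python sketch shows the trade-off on a toy problem: plain gradient descent minimising f(w) = (w - 3)², not the extension's TensorFlow optimizer. Each step multiplies the distance to the optimum by (1 - 2 × rate), so small rates converge slowly and too large a rate diverges.

```python
def gradient_descent(learning_rate, steps=50, start=0.0):
    """Minimise f(w) = (w - 3)**2, whose gradient is 2 * (w - 3)."""
    w = start
    for _ in range(steps):
        w -= learning_rate * 2 * (w - 3)
    return w


for rate in (0.1, 0.01, 0.001, 1.1):
    print(rate, gradient_descent(rate))
# 0.1 ends very close to 3, 0.01 and 0.001 are still far from it,
# and 1.1 overshoots further on every step (diverges).
```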

Common values are in the range 2-128; avoid high values, as they affect browser performance.

The number of nodes (neurons) in the input layer is determined by the dimensionality of the input data.

Try to use a value that matches the number of features.

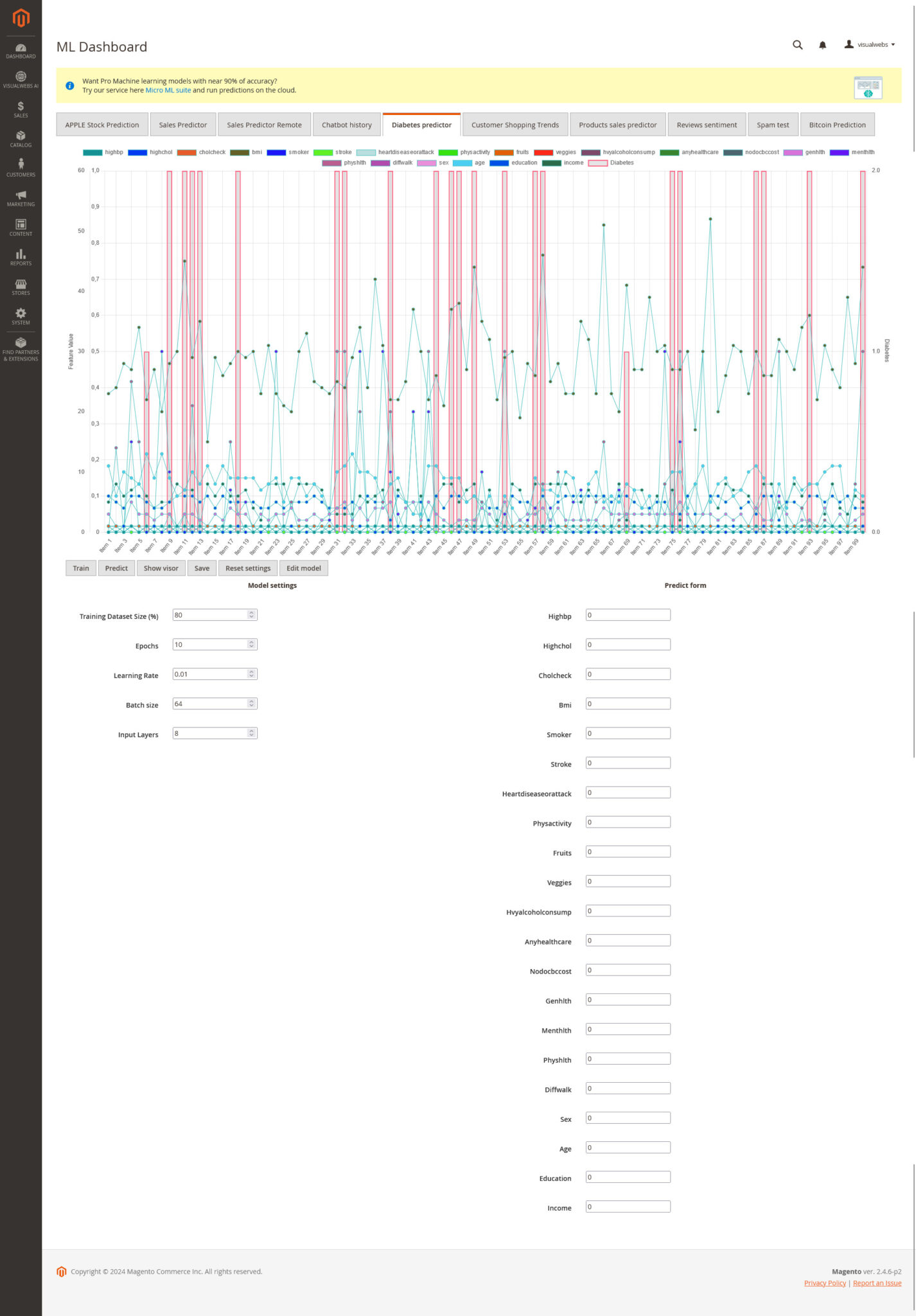

Predictions for Classifier models

Once the model is trained (and optionally saved), use the form on the right to fill in the input values and generate a prediction.

1. Fill in all the input values.

2. Run predict; a modal message then shows the prediction.
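For a Categorize classifier, a prediction is usually one score per option, and the option with the highest score is reported. This Python sketch assumes a softmax output layer and uses hypothetical labels; it illustrates the general technique, not the extension's TensorFlow.js code.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_category(logits, labels):
    """Return the most probable label and its probability."""
    if len(logits) != len(labels):
        raise ValueError("need exactly one score per label")
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


label, prob = pick_category([1.0, 3.0, 0.5], ["low", "medium", "high"])
print(label)  # medium
```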

Automated predictions (PRO feature)

The models embedded with the JavaScript libraries included in the pack are enough for general predictions, but with the help of a data scientist you can receive custom models for your business. Those models are developed in Python or with online modeling services such as Amazon SageMaker or Microsoft Power BI, and the developer imports the predictions back into the model.

Feel free to contact us to receive a quote for a dedicated data scientist for your project.

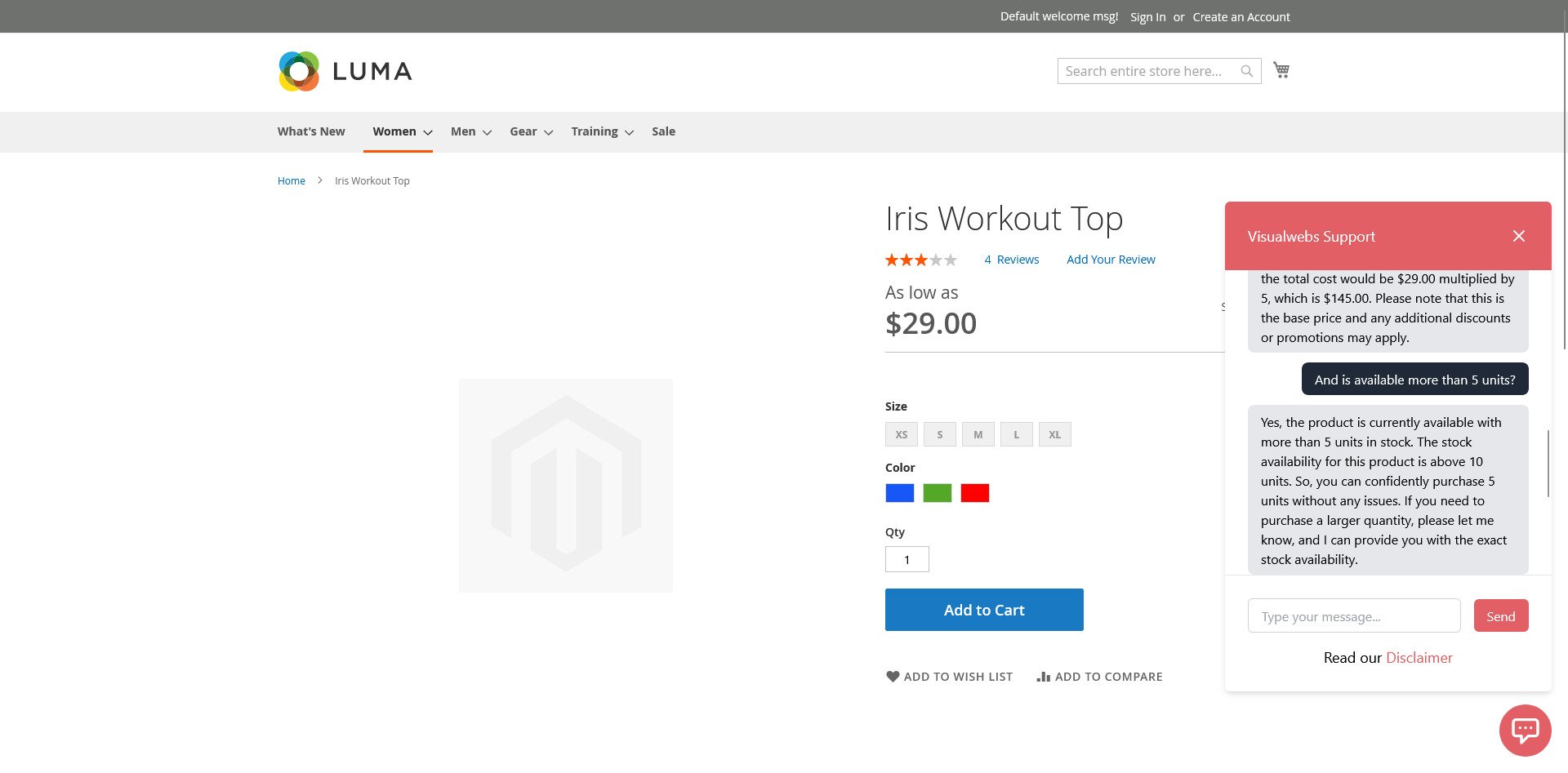

Chatbot

This system provides a smart Chatbot for your site, with advanced features such as answering questions about product stock and descriptions, translation, and order status (experimental feature).

Create a new model

Google Dialogflow and other NLP systems

This system is designed to provide multiple endpoints. It currently supports Dialogflow, so users can get their order status and use other features (beta).

The webhook endpoint you need to define in Dialogflow is https://your-magento-domain/visualwebs_ml/callback/index

For example, the system expects this call structure for the “order-status” intent:

["queryResult"]["intent"]["displayName"] == "order-status"

The “parameters” index must contain the information the user provided in the chat. This is the full POST request you need to pass:

["queryResult"]["intent"]["displayName"] == "order-status"

["queryResult"]["parameters"]["order_id"]

["queryResult"]["parameters"]["firstname"]

["queryResult"]["parameters"]["lastname"]

["queryResult"]["parameters"]["email"]

Finally, the system returns a JSON response with the order status:

{
  "fulfillmentText": "This is order status is Shipped.",
  "fulfillmentMessages": [
    {"text": {"text": ["This is order status is Shipped."]}}
  ]
}
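The request/response contract described above can be sketched as a small Python handler. This is not the extension's PHP callback: `handle_dialogflow_webhook` and the `lookup_order_status` callback are hypothetical names, and the reply wording is illustrative. The payload fields follow the structure listed above.

```python
def handle_dialogflow_webhook(payload, lookup_order_status):
    """Build a Dialogflow fulfillment response for the "order-status" intent.

    `lookup_order_status(order_id, firstname, lastname, email)` is a hypothetical
    callback that returns a status string, or None when no order matches.
    """
    query = payload.get("queryResult", {})
    intent = query.get("intent", {}).get("displayName")
    if intent != "order-status":
        text = "Sorry, I can only help with order status."
    else:
        params = query.get("parameters", {})
        status = lookup_order_status(
            params.get("order_id"),
            params.get("firstname"),
            params.get("lastname"),
            params.get("email"),
        )
        text = f"The order status is {status}." if status else "Sorry, I could not find that order."
    return {"fulfillmentText": text, "fulfillmentMessages": [{"text": {"text": [text]}}]}


def fake_lookup(order_id, firstname, lastname, email):
    # Stand-in for a real order query that should also verify name and email.
    return "Shipped" if order_id == "100000123" else None


payload = {
    "queryResult": {
        "intent": {"displayName": "order-status"},
        "parameters": {"order_id": "100000123", "firstname": "Jane",
                       "lastname": "Doe", "email": "jane@example.com"},
    }
}
response = handle_dialogflow_webhook(payload, fake_lookup)
print(response["fulfillmentText"])  # The order status is Shipped.
```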

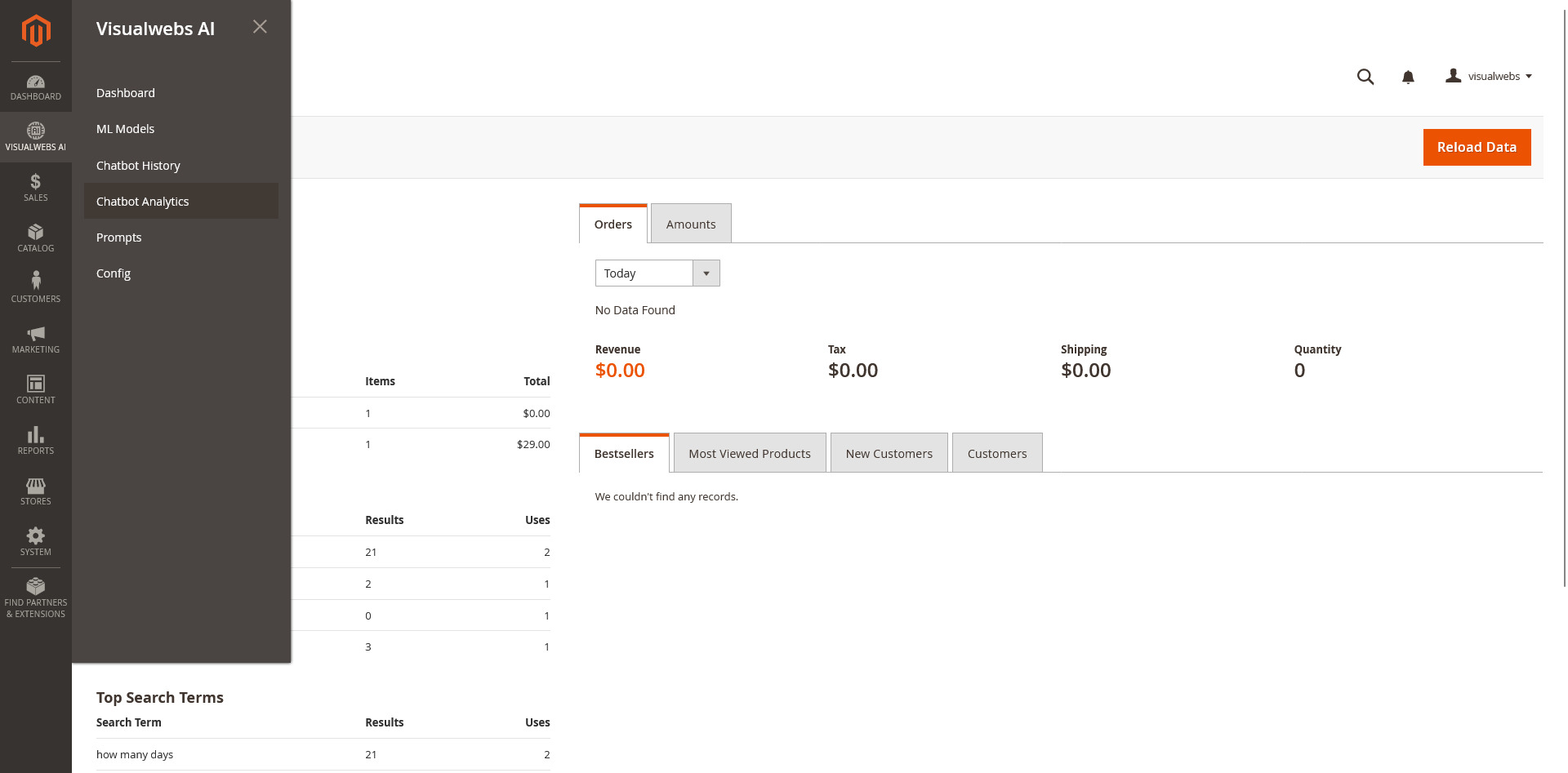

Chatbot Tools

The extension offers Stats and Chatbot History. Start by finding the links in the backend.

Chatbot History Link

Chatbot Analytics Link

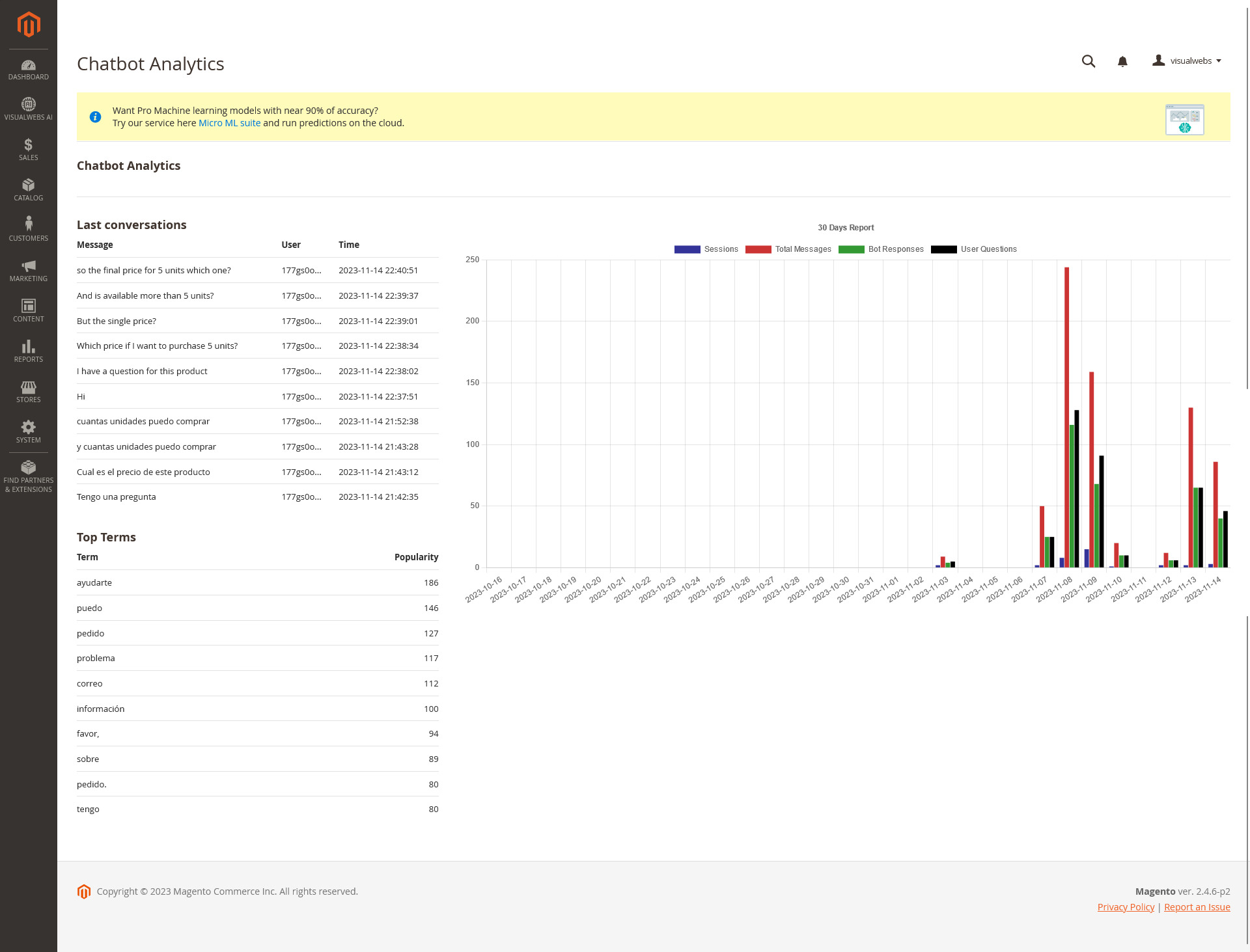

Analytics

This page has three main areas: Last conversations, Top terms, and 30-day Stats.

Summary of last conversations.

Summary of terms.

Charts for Sessions, total messages, Bot responses and user questions for the last 30 days.

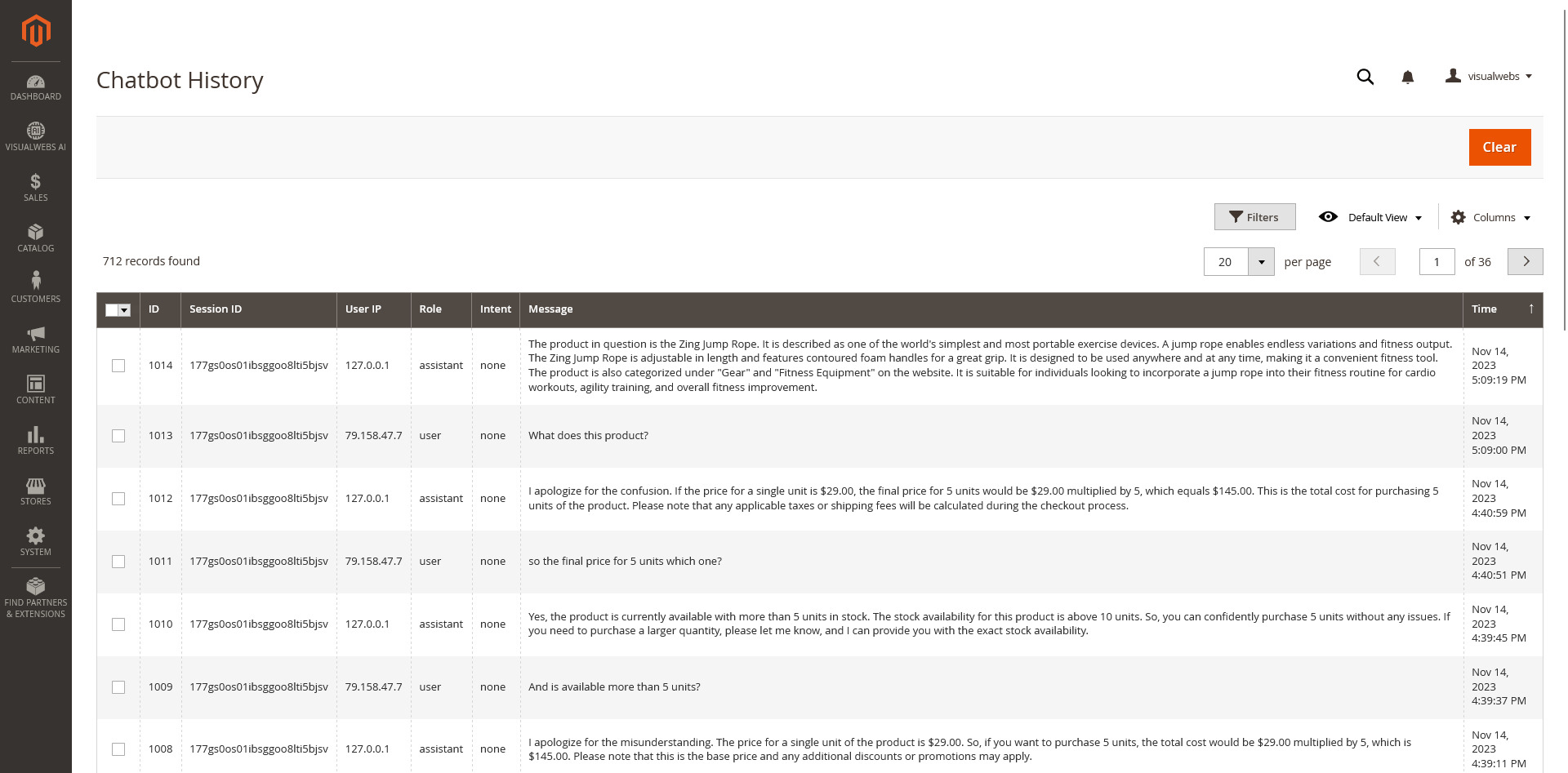

Conversation History

This grid lets you check the history, filter it, and clear it completely.

Grid with full history.

Clears all the history.

Filters and pagination.

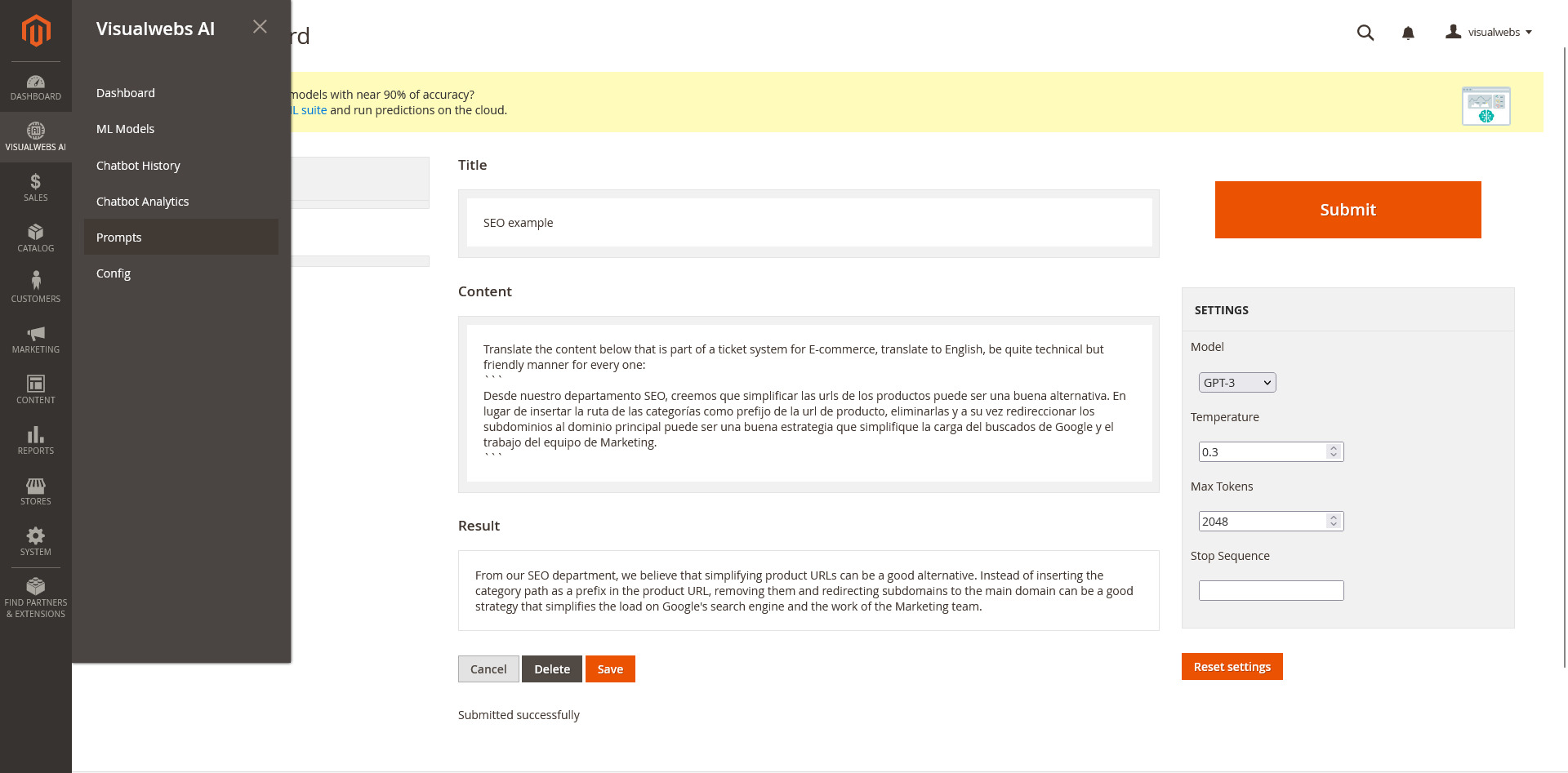

Prompts

This section describes how to create Prompt templates, which let users save templates for sending requests to ChatGPT.

Find menu link

Start by clicking the Prompts link.

Prompts Link

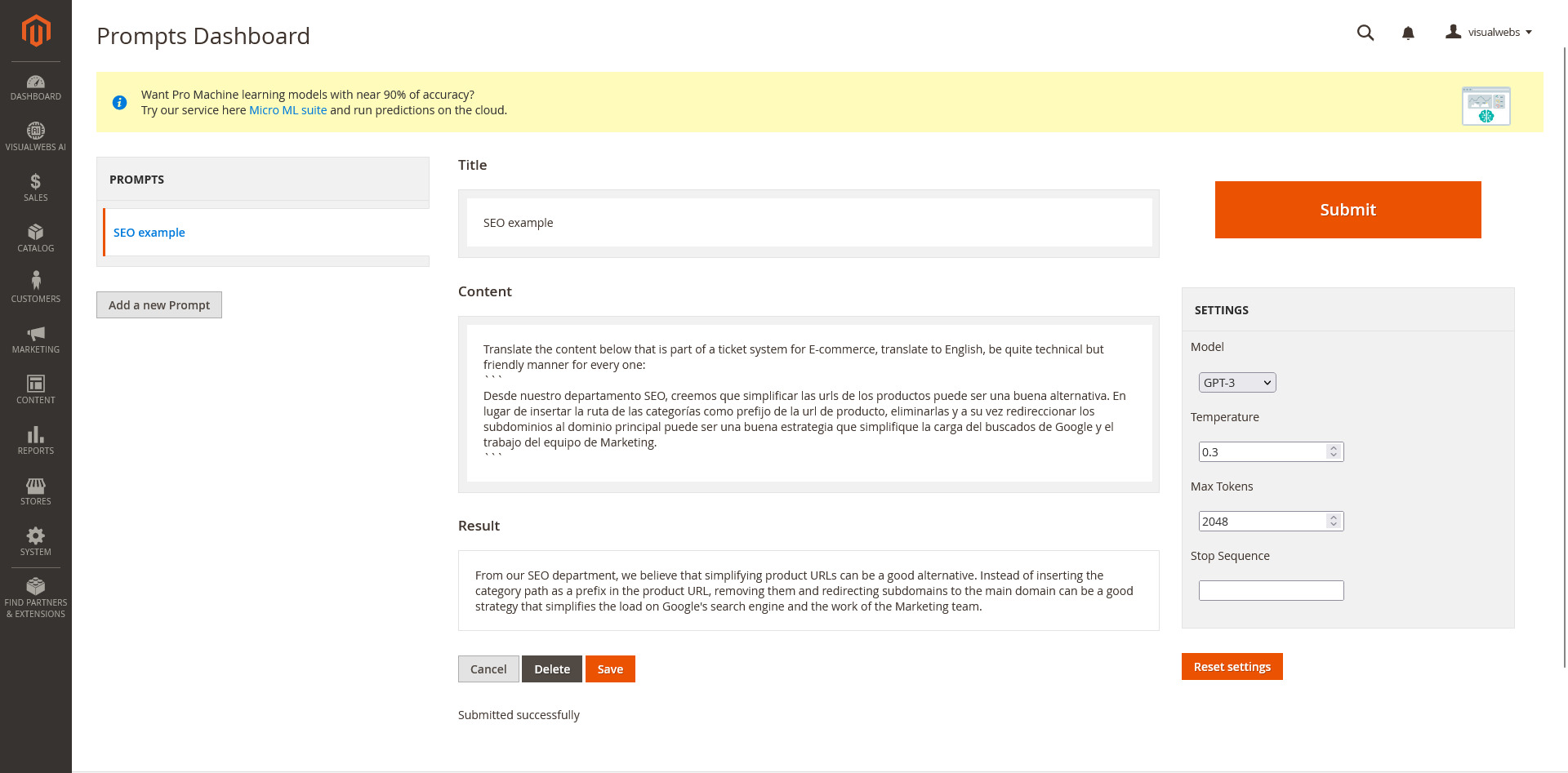

Dashboard


Click a template to preview its content in the center area.

Opens a modal to fill in the title and the content.

Editable when you use the Edit button.

This is what ChatGPT receives for completion.

Editable when you click the Edit button.

This is the response OpenAI returns for your content.

Edit/Cancel button to change the title or the content of the template.

Delete button to remove the template completely.

Save button to save changes for the current template.

Notification area that displays save confirmations and other warnings.

After a few seconds, the result is visible in the "Result" area.

GPT-3 is the most widely used and cheapest model. GPT-4 models are more expensive but better.

Higher values can be better for creative output.

It specifies the maximum number of tokens in the response text.

Tokens can be words or even smaller units like characters, depending on the language and text.

For example, if you set "max_tokens" to 50, the response generated by the model will be limited to 50 tokens in length.

If the model reaches this token limit, it will truncate the text accordingly.

For example, you can use a stop sequence like "\n" to indicate the end of a paragraph or a conversation turn.

Leave the default values if you are not sure what to enter.
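To show how the settings above (model, temperature, max tokens, stop sequence) fit together, this Python sketch builds a request body in the format of the OpenAI Chat Completions API. It assumes the extension calls that endpoint; the function name and default values are illustrative, and the sketch only builds the JSON without sending it.

```python
import json


def build_chat_request(prompt, model="gpt-3.5-turbo", temperature=0.7,
                       max_tokens=256, stop=None):
    """Return a JSON body for a Chat Completions request (not sent here)."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # higher = more varied, "artistic" output
        "max_tokens": max_tokens,    # the response is cut off at this many tokens
    }
    if stop:
        body["stop"] = stop          # generation halts when a stop sequence appears
    return json.dumps(body)


request_body = build_chat_request("Write a tagline for a coffee shop.",
                                  max_tokens=50, stop=["\n"])
print(request_body)
```

Sending it would be an HTTPS POST to https://api.openai.com/v1/chat/completions with an `Authorization: Bearer <your API key>` header.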